Understanding Artificial Intelligence: Large Language Models

What is this?

It is a pair of training and awareness sessions for staff in the Faculty of Arts, Design, and Humanities at De Montfort University. The sessions aim to increase understanding of how one kind of Artificial Intelligence (AI), the so-called Large Language Models, works. Large Language Models are the technology whose ability to hold a written conversation with a user has recently improved dramatically; the most famous example is OpenAI's ChatGPT software. Using hands-on learning techniques and simple textual worked examples, the sessions will explain the core technical ideas behind Large Language Models: Markov Chains, word embeddings, neural networks, and machine learning.

To understand what AI is capable of and how it may affect what we do in Higher Education, we need some grounding in the technicalities of how it works. But most of the available guides to this topic are either trivializing or overly technical. This training will outline how Large Language Models operate, with enough technical detail to let colleagues appreciate not only why these new forms of AI perform so much better than previous ones, but also what improvements we might expect to see in the near future.

Making sense of the four key ideas of Markov Chains, word embeddings, neural networks, and machine learning will give attendees enough technical knowledge to follow current debates about the dangers and likely opportunities of AI. Having developed small-scale but working AI models of their own, attendees will explore what happens when these models are scaled up to use more parameters, larger training datasets, and greater computing power. The unexpected effectiveness of scaling up underpins the recent rapid progress in this part of the field of AI. We will end by briefly considering the social, economic, and political factors that are likely to shape AI's impact on teaching and research in Higher Education.

No prior technical expertise or understanding of AI is required: these are sessions aimed specifically at the interested amateur looking to broaden her knowledge.

Registration

BOOKING IS ESSENTIAL because the hands-on aspect of this training limits us to just 12 places. Priority will be given to people in the School of Humanities and Performing Arts and then to people in the wider Faculty of Arts, Design, and Humanities. Places not taken by people in those groups will be offered to people from other Faculties. In the first instance, please book wherever you are from, and we will confirm your place at the end of May 2024.

When and Where

The two sessions will take place in person on the De Montfort University Leicester campus on 20 June and 11 July 2024, in the Minimal Computing Lab (CL0.30) and the modern computing lab (CL1.32e), both in the Clephan Building.

The Programme

Note that the second day of training builds on the first, but attending only one of the two days will still give you some sense of our topic.

20 June 2024

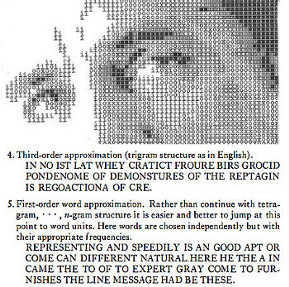

1-2.15pm Introduction, then Language Markov Chains led by Gabriel Egan

2.15-2.30pm Tea and Coffee Break

2.30-4pm Word Embeddings (the word2vec algorithm) led by Paul Brown

11 July 2024

Notice that we start in CL1.32e.

1-1.30pm Hands-On with Word Embeddings led by Paul Brown in CL1.32e

1.30-2.30pm Neural Networks and ChatGPT As a Spreadsheet led by Gabriel Egan in CL1.32e

2.30-2.45pm Tea and Coffee Break in CL0.31

2.45-3.45pm How Machines Learn led by Nathan Dooner in CL0.30

3.45-4pm Conclusions and discussion in CL0.30

Who's doing the training?

The team consists of three scholars who have, between them, 40 years of experience in the use of computers in textual research. Here they are:

Gabriel Egan is Professor of Shakespeare Studies and Director of the Centre for Textual Studies at De Montfort University and one of the four General Editors (with Gary Taylor, Terri Bourus, and John Jowett) of the New Oxford Shakespeare, of which the Modern Critical Edition, the Critical Reference Edition and the Authorship Companion appeared in 2016; the remaining two volumes will appear in 2025. He co-edits the academic journal Theatre Notebook for the Society for Theatre Research. He teaches computational approaches to literary-historical textual analysis and the art of letter-press printing.

Paul Brown is a freelance consultant on computational methods for textual scholarship. He has written software to help determine the authorship of early modern plays and has published non-technical explanations to accompany the code. He teaches undergraduates how to analyse literary and historical data with computers and has taught many sessions to research students and academics on how to program and on text-mining. He spends most of his time working in the private sector, writing software to automate business tasks, and helping teams use databases to manage their work. He holds a PhD in theatre history from De Montfort University.

Nathan Dooner is a PhD student in the School of Humanities at De Montfort University. His research is concerned with the use of computational stylistic analysis to determine who wrote the plays of Shakespeare's time for which the authorship is uncertain. He previously worked for Intel in Ireland and is fluent in the programming language Python. He runs large-scale experiments comparing the effectiveness of the leading techniques of computational authorship attribution. He has published articles on computational methods in the journal Digital Scholarship in the Humanities.